December Science Team Call

I thought for this week’s blog post, I would talk a bit more about what happened in last week’s science team call and what we’ve been up to over the past month or so. Last month was the Division of Planetary Sciences (DPS) meeting, where for the first time Michael, Candy, Anya, and I were all in a room together. So we had dinner and went through the paper draft and what’s left to do to get the paper out. Michael’s poster at the meeting showed the first results of the full pipeline run distinguishing fans from blotches, so those results were also discussed during our meeting.

Most of the time we rely on email and team calls to work together. Right now we have an hour-long call every two weeks between Michael, Anya, Candy, and myself. I think these calls have been really important in helping us work together over such long distances, as I’m based in Taipei, Candy is based in Utah, and Michael and Anya live in Colorado. It’s our chance to update each other, talk over issues and stumbling blocks, and talk about the data.

Michael’s been hard at work this year getting all the pieces in place to make the final catalog for Season 1 and Season 2. Overall the software pipeline Michael has built works incredibly well, but we still need to check all the edge cases where we might need to tweak the process. I took on the task of checking a subset of subjects, comparing the identified fans and blotches against the individual volunteer markings to look for any anomalies or issues with the clustering pipeline. The algorithm Michael’s developed takes your classifications and combines them to identify where there are fans and blotches. Based on how many people used which tool (fan or blotch), we then determine whether the dark region is a fan or a blotch. I did this review shortly after the DPS meeting, and Michael and I filled in Candy and Anya during the call.
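
To make the fan-versus-blotch decision concrete, here is a minimal sketch of a simple majority vote over the tools volunteers used on one clustered dark region. It is an illustration only and not the actual pipeline code: the function name `label_cluster`, the input format, and the way ties are handled are all assumptions made for this example.

```python
from collections import Counter


def label_cluster(tools_used):
    """Label one clustered region from the tools volunteers used on it.

    tools_used: list of strings, each "fan" or "blotch" (hypothetical format).
    Returns "fan", "blotch", or "undecided" on a tie (the tie rule is an
    assumption, not something the real pipeline is known to do).
    """
    counts = Counter(tools_used)
    n_fan, n_blotch = counts["fan"], counts["blotch"]
    if n_fan == n_blotch:
        return "undecided"
    return "fan" if n_fan > n_blotch else "blotch"


# Made-up votes for a single region, purely for illustration.
print(label_cluster(["fan", "fan", "blotch", "fan"]))
```

A real pipeline would likely need a more careful rule for regions where the vote is close, which is exactly the kind of edge case the review above was looking for.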

Michael’s now working on implementing some changes to his pipeline, and we’ll take a look at those results soon. Now that we’ve got this first pass from the full pipeline, we can start writing the code to make the plots we want for the first paper and look at the distributions of fans and blotches over time and across the different target regions in Seasons 2 and 3. We spent a good chunk of this month’s call talking about which plots would be the most diagnostic. We also talked about the strategy we want to use to compare images with different binning/image resolutions. Anya and Michael are going to work on that over the coming weeks. I’ve got some tasks assigned for the next call as well, including comparing Michael’s catalog to the gold standard dataset the science team generated.

We also talked about the new text Michael wrote in the paper draft, and set a deadline for the rest of us to read it and give back comments. The next full team call will be in early/mid January. We’re getting closer and closer to having a complete Planet Four science paper ready to submit to a journal. It’s nice to see the progress and watch everything coming together. Thanks for your continued clicks. The hardest part is getting the pipeline complete. Once we have this huge step completed, we can rapidly produce catalogs for the entire Planet Four classification database and start comparing the mapped observations from 4 Mars years of Manhattan and Inca City that you’ve marked. I’m really looking forward to seeing what we learn from that.

Making Tables

A quick update on the first paper. We’re getting closer to having the final clustering procedure nailed down. Once we’ve got that, we can make the final catalog of markings from Season 2 and Season 3 from the millions of classifications we have gathered over the past few years. While Michael has been working on that, I’ve been working on some of the other tables we need to include in the paper. We know what we’ve done and have good knowledge of the HiRISE data, but we need to make sure it’s clear in the paper so that any researcher or reader has all the information needed to use the catalog of fans and blotches we’re generating thanks to your clicks. To do that we’re going to put in a table that summarizes all the relevant info about the HiRISE observations from Season 2 and Season 3.

The easiest way I found to do this is to grab the information from the headers of the reduced, single-filter, non-map-projected HiRISE images that we created from the raw HiRISE observations, which include the spacecraft pointing information. The HiRISE team reduction pipeline produces the three-color-band mosaics that we dice up and show on http://www.planetfour.org, but those don’t include the spacecraft information. So we had to build a single-filter version ourselves, adding a few steps to the process to get the location on the South Pole and the spacecraft information into the image headers. This is what Chuhong worked on last summer.

So I took a script written by Gauri, adapted from Chuhong’s code, to get the other relevant info like imaging scale, north azimuth, and solar longitude from the single-filter image headers and stick it in a MySQL (a database system) table. Then I spent a bit of time writing code to read the table I created and output it in the format needed for the paper. We’re writing the paper in a format known as LaTeX. I spent an afternoon getting the format correct so that the table file would compile. You can glimpse part of the results (the first few lines of the multi-page table) below. I still need to reduce the number of decimal places output in certain columns, but the basic information is there. We’ll be including a version of this table in the paper text or supplementary material.
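
For readers curious what "output it in the format needed for the paper" can look like, here is a minimal sketch that turns rows of observation metadata into LaTeX table lines with a fixed number of decimal places. It is not the script described above: the function names, the dictionary keys, the column headings, and the example values are all hypothetical placeholders, and a real version would read its rows from the database table rather than from a hard-coded list.

```python
def latex_row(record, decimals=2):
    """Format one observation as a LaTeX table row.

    record: dict with hypothetical keys "obs_id", "imaging_scale",
    "north_azimuth", and "solar_longitude".
    """
    # Underscores must be escaped in LaTeX text.
    cells = [record["obs_id"].replace("_", r"\_")]
    for key in ("imaging_scale", "north_azimuth", "solar_longitude"):
        cells.append(f"{record[key]:.{decimals}f}")
    return " & ".join(cells) + r" \\"


def latex_table(records, decimals=2):
    """Wrap the formatted rows in a simple tabular environment."""
    lines = [
        r"\begin{tabular}{lrrr}",
        r"Observation & Scale (m/pixel) & North azimuth (deg) & $L_s$ (deg) \\",
        r"\hline",
    ]
    lines += [latex_row(rec, decimals) for rec in records]
    lines.append(r"\end{tabular}")
    return "\n".join(lines)


# Placeholder values only; these are not real HiRISE observations.
rows = [
    {"obs_id": "OBS_A", "imaging_scale": 0.4987654,
     "north_azimuth": 123.456789, "solar_longitude": 180.0},
]
print(latex_table(rows))
```

Keeping the number of decimals as a single parameter makes it easy to trim the precision of every numeric column at once.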

Boulders and a Planet Four Summer

Today we have the last post from Gauri Sharma, who spent her summer working on Planet Four as part of the ASIAA Summer Student Program. Gauri gave a talk at the end of August detailing her work with boulders and developing a pipeline to find the same position from one Planet Four image in the other images shown on the site. Below Gauri presents her talk slides and her project. Thanks Gauri for all your help this summer!

I am going to introduce you to some of the features found on Mars’ South Pole and the tools used to study them. I will also briefly explain the science behind those features, based on my research over the last two months.

This slide shows how Mars is similar to and different from the Earth.

During the winter, a CO2 ice slab forms over the South Pole. It is nearly translucent and ~1 m thick. The slab is made up of frozen carbon dioxide plus dust and dirt from the atmosphere. Below the ice sheet is a layer of dust and dirt.

When spring comes, sunlight penetrates the CO2 ice slab and heats the base of the ice cap. As the temperature of the ice at the base increases, the CO2 sublimates. The sublimating CO2 creates a bubble of trapped, pressurized gas beneath the ice layers. This gas pushes continuously on the upper layers of ice until, at some point, the slab cracks and the pressurized gas vents out in a jet-like eruption, or geyser. It is thought that material (dirt and dust) from below the ice sheet is carried up to the surface of the ice sheet by the pressurized gas and is blown by the surface wind into a fan shape.

It is predicted that, just after an eruption, the geysers look like this.

When there is enough wind at the surface to blow the geyser material, fans appear on the surface, and it looks like fig. 1.

If the wind is weak or not blowing at all, the geyser material is deposited near the geyser source and a black spot, called a blotch, appears on the surface (shown in fig. 2).

Also, during the spring and summer when the geysers are active, the trapped carbon dioxide gas is thought to slowly remove material and carve channels in the dirt surface before it breaks out from under the ice sheet. In midsummer, when the CO2 has fully vaporized, the channels are left as empty cracks. Repeated annually, this process erodes the surface over time and produces channel networks that look like spiders (official name: araneiform).

Every spring and summer, hundreds of thousands of fans wax and wane on the Martian South Pole. These features have been captured by the HiRISE camera, which has been onboard the Mars Reconnaissance Orbiter since 2005.

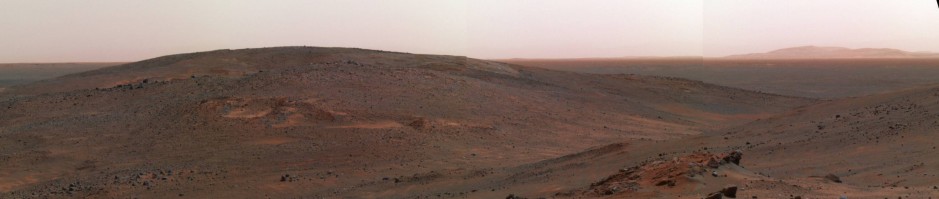

These are sample images captured by the HiRISE camera.

While analyzing these images, scientists ran into difficulties: automated computer routines have not been able to accurately identify and outline the individual features. But scientists thought that the human eye could distinguish and outline these features. So a group of scientists created the Planet Four website to study Mars with the public’s help.

This is what the Planet Four website looks like. Working with Planet Four is very easy: just sign up on the Planet Four website and take part in classifying shapes on the surface. Before you get started, the site provides a short intro that shows you how to mark features and which tools to use to classify them. Volunteer classifications are collected together, and researchers combine these classifications (markings). They have found that the combined markings produce extremely reliable and fruitful results about the features found on the surface of Mars.

In slide 10, we saw that Planet Four images look different from the original images taken by the HiRISE camera. That is because a HiRISE image is huge. So for proper analysis and accurate outlining, the Planet Four team cut the HiRISE images into sub-images and put them on the Planet Four website. We call them tiles.

For my project, Planet Four was one of the most important tools. I used Planet Four tiles to examine boulders. Boulders are one of the more interesting objects on the surface of Mars, and in the South Pole regions monitored by HiRISE only one area seems to have boulders. This region has been dubbed ‘Inca City.’ The boulders in Inca City are likely impact produced. Boulders are interesting because we think they may act as a heat source for geyser formation. I am looking at whether fans are associated with boulders more often than not in the images captured by HiRISE. I studied how the surroundings of boulders change over time, and whether boulders really act as a source of geyser formation.

I chose some 35 tiles that contain boulders and marked all the boulders (shown in fig. 2). After marking, Planet Four provides a markings CSV file that contains the x and y position of each marked boulder and the corresponding tile name.

To analyze the surroundings of boulders over time, I needed to search the 5-year HiRISE database using the markings CSV from Planet Four. The database contains more than 1 lakh (100,000) tiles, so searching it manually, finding the useful data, calculating the information, and collecting and grouping the matching files to look at yearly changes would be terrible.

So for this purpose I created a pipeline that can do all this in seconds. This is a very powerful pipeline that the Planet Four team did not have before.

These are the results of my pipeline.

The images in the blue box clearly show that during a season, as the months pass, the surroundings of the boulders change (fans wax and wane): the boulders get covered with fan material, and in the next season the boulders become visible again.

A Planet Four Science Poster

ASIAA, my institute in Taiwan, had its 5-year external review, where a panel of experts in the field from outside the institute comes in, gives a critique, and highlights both the things that are going well and the potential areas to be strengthened. At this review there was a poster session for postdocs and other researchers to present their projects. Last week was the poster session. I gave an update on Planet Four and presented Planet Four: Terrains. I thought I’d share the poster (typos and all) with you. You might find that some of the figures are familiar and that you’ve seen them on this very blog in one form or another.

Summer Student 2015 Update: Coding with Loading

Today we have a post from Gauri Sharma who will be working on Planet Four this summer as part of the ASIAA Summer Student Program.

So the second week has passed in the ASIAA Summer program. I would like to call this week “coding with loading.” This past week, I played with Python. Don’t be scared, I will reiterate for you again: it is not a snake, it’s a kind of language like Java, C, C++, etc.

As the week ran on, so did my work. On the third day of the week, my supervisor said “let’s go and have a drink.” It sounds so awesome, right? I was thinking: “Yippee! I am going to spend some time with my supervisor.” But leaving imagination aside, I am going to tell you the truth. No doubt it was a wow time, but along with it I got a full week of tasks. And finally I got all those tasks done, which is what I wanted. This is called ‘loading.’ Now time to talk about coding…

On 17 July I met with Meg again, and we worked fully on debugging my code. Oh my God, my code!! Full of errors. She spent almost 2 hours with me. More than twice in between, my mind said: “Gauri Sharma, you are gone, she is going to kick you out in a few minutes, you have wasted so much of her time.” I was literally so scared. But we got through the debugging (and she didn’t kick me).

Before I tell you what my code is for, I would like to explain some key terms: HiRISE images and Planet Four images. The High Resolution Imaging Science Experiment (HiRISE) is a camera on board the Mars Reconnaissance Orbiter that takes pictures of Mars with resolutions of 0.3 m/pixel. The resulting images are so big that they are diced into tiles (Planet Four images), which are what you classify on the Planet Four website. Right now, I am working to relate Planet Four images to the full HiRISE images, so I can easily locate a particular interesting area in the larger HiRISE image. So now I can tell you: my code works by converting a Planet Four image (x, y) position into a HiRISE image (x, y) pixel position.

There is a happy ending: my first master code is working. And as usual Meg makes me happy; her line “you are making progress” always leaves a pretty smile on my face and helps me keep calm and cool in such a HOT Taipei summer.

Then I moved on to a new task that I got in my loading session. This new code took much more time than expected, but it is finally done. It works by converting the corners of a Planet Four image (x, y) position into HiRISE image (x, y) pixel positions. So on Monday I will be ready with my second master code. I have gathered so many Python tricks, and I am finally enjoying them. One thing I would like to say about coding: “it’s awesome, it’s kind of magic!!!!”
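
As a rough illustration of the kind of conversion described in this post (not the actual code), here is a minimal sketch that maps a position inside a tile, and the tile’s corners, to pixel positions in the full HiRISE image. It assumes the 840 x 648 pixel tile size and 100 pixel overlap quoted in the “Tiles and Full Frame Images” post on this blog. The function names, the zero-based column/row tile indexing, the top-left origin, and the regular grid with no special treatment of edge tiles are all assumptions made for this example.

```python
TILE_WIDTH, TILE_HEIGHT = 840, 648  # tile size in pixels
OVERLAP = 100                       # pixels shared with right/bottom neighbor
STEP_X = TILE_WIDTH - OVERLAP       # horizontal offset between tile origins
STEP_Y = TILE_HEIGHT - OVERLAP      # vertical offset between tile origins


def tile_to_hirise(col, row, x, y):
    """Convert (x, y) inside tile (col, row) to full-image pixel coordinates.

    col and row are hypothetical zero-based tile indices counted from the
    top-left corner of the HiRISE image.
    """
    return col * STEP_X + x, row * STEP_Y + y


def tile_corners_in_hirise(col, row):
    """Return the four tile corners in full-image pixel coordinates,
    ordered top-left, top-right, bottom-right, bottom-left."""
    x0, y0 = tile_to_hirise(col, row, 0, 0)
    x1, y1 = tile_to_hirise(col, row, TILE_WIDTH - 1, TILE_HEIGHT - 1)
    return [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]


print(tile_to_hirise(2, 1, 10, 20))
print(tile_corners_in_hirise(1, 0))
```

Because neighboring tiles overlap, the same spot on Mars can appear in more than one tile, and this mapping sends each of those tile positions to the same full-image pixel.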

That’s all for this week. See you next week.

Why we need Planet Four: Terrains?

Hi there!

I want to talk about why we created the new project Planet Four: Terrains if we have Planet Four already.

The very high resolution images of the HiRISE camera are really impressive, and one might think that there is no reason to use a camera with lower resolution anymore. Wrong!

First, the high resolution of HiRISE images means large data volume. Storing large amounts of data on board and downloading them from the MRO spacecraft to Earth is slow (and expensive), and this means we are always limited in the number of images HiRISE can take. Sadly, we will never cover the whole surface of Mars with the best HiRISE images, so we use different cameras for that: some with very coarse resolution and some intermediate, like the Context Camera (CTX). We can use CTX, for example, to gain statistics on how often one or another terrain type appears in the polar areas. This is one reason why Planet Four: Terrains is important.

Second, because HiRISE is used for targeted observations, we need to know where to point it! And we had better find interesting locations to study. We cannot say “let’s just image every location in the polar regions!”, not only because of reason 1 above, but also because we work in a team of scientists, each of whom has their own interests and would surely like their targets to be imaged as well. We should be able to prove to our colleagues that the locations we choose are truly interesting. Showing a low-resolution image and pointing to an unresolved interesting terrain is one of the best ways to do that. And then, when we get to see more detail, we will see whether it is an active area and whether we need to monitor it during different seasons.

Help us classify terrains visible in CTX images with Planet Four: Terrains at http://terrains.planetfour.org

Planet Four Season 2 and Season 3 Site Statistics

Are you ever curious to know how people classify on Planet Four? Well today is your day. I’m working on generating the final numbers for the first half of the Planet Four science paper in preparation. The paper is an introduction to the project and will contain the catalog of blotches and fans identified thanks to your help in Season 2 and Season 3. We’re getting closer to having the paper and the final catalog preparation in shape for submission by the end of the summer.

As part of the paper, I wrote the section that talks about the classification rate and how people classify on the site. So I made a few close-to-final plots and calculated some relevant numbers from the classification database for Seasons 2 and 3 that will be included in the paper, and I thought I’d share them here. The values and figures below are pretty close to what will be in the submitted science paper.

We had a total of 3,517,363 classifications for Seasons 2 and 3 combined. More blotches than fans were drawn: 3,483,724 blotches compared to 2,825,930 fans. A total of 84,604 unique IP addresses and registered volunteers contributed to Planet Four while Season 2 and Season 3 tiles were in rotation. Most classifiers don’t log in. There is no difference between the non-logged-in and logged-in experience on Planet Four, other than that if you classify with your Zooniverse account we can give you credit for your contributions on the acknowledgement website we’ll make for the first paper. We can only get your name (if you allow the Zooniverse to print it to acknowledge your effort) if you classify with a Zooniverse account.

The first plot shows the distribution of the number of classifications per tile in Season 2 and Season 3. You can see the impact of BBC Stargazing Live. Most of our classifications for Season 2 and Season 3 came from the period during and the few months after BBC Stargazing Live, when the site was getting lots of classifications and attention, so we retired tiles after more classifications than we do now. Currently a tile needs 30 classifications before we retire it, a number that better suits our current classification rate. You can see that nearly all of the Season 2 and Season 3 tiles have 30 classifications or more, with a range of total classifications that we have to take into account when doing the data analysis and identifying the final set of blotches and fans from your markings, since some tiles will have had significantly more people looking at them than others.

The next plot shows the distributions of classifications for logged-in and non-logged in (without a Zooniverse account) classifiers combined for Season 2 and Season 3. We have a way to track roughly the number of classifications a non-logged in session does so I count them as a separate ‘volunteer’ in this plot (note I cut the plot off at 100 classifications for visibility).

You can see that most people only do a few classifications and leave, and that there is a tail of volunteers who do more work. That’s typical of the participation in most websites on the Internet. About 80% of our classifications come from people who do more than 50 classifications, which is typical of many Zooniverse projects. Both the people who contribute a few clicks and those who contribute more are valuable to the project and help us identify the seasonal features on Mars. So thanks for any and all classifications you made towards Season 2 and Season 3, and if you have a moment to spare today there are many more images waiting to be classified at http://www.planetfour.org.
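
If you want to see how a number like “about 80% of classifications come from people who do more than 50” is computed, here is a minimal sketch. It is not the analysis code used for the paper: the function name is hypothetical, and the example counts are made up purely to show the arithmetic.

```python
def share_from_heavy_classifiers(counts_per_volunteer, threshold=50):
    """Fraction of all classifications made by volunteers (or sessions)
    who each did more than `threshold` classifications."""
    total = sum(counts_per_volunteer)
    if total == 0:
        return 0.0
    heavy = sum(c for c in counts_per_volunteer if c > threshold)
    return heavy / total


# Made-up example: four casual visitors and one dedicated volunteer.
example_counts = [1, 2, 3, 4, 90]
print(share_from_heavy_classifiers(example_counts))
```

Each entry in the list stands for one logged-in volunteer or one non-logged-in session, matching the way volunteers are counted in the plot described above.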

Tiles and Full Frame Images

I thought I’d go into a bit more detail about what exactly you’re seeing when you review and classify an image on Planet Four. On the main classification site we show you images from the HiRISE camera, the highest resolution camera ever sent to another planet. Looking down from the Mars Reconnaissance Orbiter, HiRISE is extremely powerful. It can resolve objects down to the size of a small card table on the surface of Mars. The camera is a push-broom style camera: it uses the motion of the spacecraft it is hitching a ride on to take the image. During the HiRISE exposure, MRO moves at 3 km/s along its pole-to-pole orbit, which builds up the length of the image, so you get a long, skinny image in the direction of MRO’s orbit. The camera can also be tilted relative to the surface, which enables stereo imaging.

The HiRISE images are too big to show at full resolution and full size in a web browser. The classification interface wouldn’t load quickly, as these files are on the order of ~300 Mb, way too big to email! But the other reason is that the full extent of a HiRISE full frame image is too big and zoomed-out for a human being to review and accurately see all the fans and blotches, let alone map them. So to make it easier to see the surface detail and the sizes of the fans and blotches, we divide the full frame images into bite-sized 840 x 648 pixel subimages that we call tiles.

For the Season 2 and Season 3 monitoring campaign, a typical HiRISE image is associated with 36-635 tiles. When you classify on the site, you’re mapping the fans and blotches in a tile. Each tile is reviewed by 30 or more independent volunteers, and we combine the classifications to identify the seasonal fans and blotches. To give some scale, for typical configurations of the HiRISE camera, a tile is approximately 321.4 m long and 416.6 m wide. The tiles are constructed so that they overlap with their neighbors. A tile shares a 100 pixel overlap in width and height with its right and bottom neighboring tiles. This makes sure we don’t miss anything in the seams between tiles.
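
As a quick back-of-the-envelope check on those numbers, the short sketch below works out the pixel scale implied by the tile dimensions and what the 100 pixel overlap corresponds to on the ground. It uses only the figures quoted in this post; the variable names are just for illustration, and it assumes the 840 pixel side corresponds to the 416.6 m width and the 648 pixel side to the 321.4 m length.

```python
TILE_WIDTH_PX, TILE_HEIGHT_PX = 840, 648   # tile size in pixels
TILE_WIDTH_M, TILE_HEIGHT_M = 416.6, 321.4  # approximate tile size in meters
OVERLAP_PX = 100                            # overlap with neighboring tiles

# Meters covered by one pixel, estimated separately from each dimension.
scale_across = TILE_WIDTH_M / TILE_WIDTH_PX
scale_along = TILE_HEIGHT_M / TILE_HEIGHT_PX

# Ground distance covered by the overlap strip between neighboring tiles.
overlap_m = OVERLAP_PX * scale_across

print(f"scale: {scale_across:.3f} and {scale_along:.3f} m/pixel")
print(f"overlap: about {overlap_m:.1f} m")
```

Both dimensions give roughly half a meter per pixel, so the overlap strip between neighboring tiles is roughly 50 m wide on the surface.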

If you ever want to see the full frame HiRISE image for a tile you classified, favorited, or just stumbled upon on Talk, there’s an easy way to do it. On the Talk page for each tile we have a link below the image called ‘View HiRISE image’, which will take you to the HiRISE team public webpage for the observation. That page includes links to the full frame image we use to make tiles, plus more (note: we use the color non-map-projected image on Planet Four). Try out this example on Talk.

So next time you classify an image and notice how detailed it is, remember that although it’s just a small portion of the observation, your classifications are hugely important. Without them we wouldn’t be able to study and understand everything that’s happening in the HiRISE observations. It’s only with the time and energy of the Planet Four volunteer community that we are able to map at such small scales and individually identify the fans and blotches, which is crucial for the project’s science goals. So thank you for your clicks!

Clustering the PlanetFour results

Our beloved PlanetFour citizen scientists have created a wealth of data that we are currently digging through. Each PlanetFour image tile is currently being retired after 30 randomly selected citizens pressed the ‘Submit’ button on it. Now, we obviously have to create software to analyze the millions of responses we have collected from the citizen scientists, and sometimes objects in the image are close to each other, just like in the lower right corner of Figure 1.

Figure 1: Original HiRISE cutout tile that is being shown to 30 random PlanetFour citizen scientists.

And, naturally, everybody’s response to what can be seen in this HiRISE image is slightly different, but fret not: this is what we want! The “wisdom of the crowd” effect means that the mean value of many answers is very close to the real answer. See Figure 2 below for an example of the markings we have received.

Note the number of markings in the lower right, covering both individual fans that are visible in Figure 1. The software analyzing these markings needs to be able to work out what each marking was for, that is, which visual object in the image the individual citizen scientist meant to mark. And I admit, looking at this kind of overwhelming data, I was a bit skeptical that it could be done. That would still be fine, because one of our main goals is to determine wind directions, and as long as every subframe results in the indication of a wind direction, we have learned A LOT! But if we can disentangle these markings to show us individual fans, we can learn even more. We can count the amount of activity per image more precisely, to learn how ‘active’ the area is. And we can even learn about changes in wind direction, if two different wind directions can be distinguished at the same source of activity. For that, we need to be able to separate these markings as well as possible.

And we are very glad to tell you that this indeed seems possible, using a modern data analysis technique called “clustering” that looks at relationships between data points and how they can be combined into more meaningful statements. Specifically, we are using the so-called “DBSCAN” clustering algorithm, which allows us to choose the number of markings required to define a cluster family and the maximum distance allowed for another marking before it is ‘rejected’ from that cluster family. Once the cluster members have been determined, simple mean values of all marking parameters are taken to determine the resulting marking, and Figure 3 shows the results of that.
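
For the curious, here is a small, self-contained sketch of the DBSCAN idea followed by the averaging step. It is not the pipeline’s actual code. It clusters only the (x, y) positions of markings, whereas the real markings carry more parameters; the function names, the parameter names `eps` (maximum distance) and `min_samples` (markings required for a cluster), and the example coordinates are all made up for illustration.

```python
import math


def dbscan(points, eps, min_samples):
    """Plain DBSCAN on a list of coordinate tuples.

    Returns one label per point: 0, 1, 2, ... for clusters, -1 for noise.
    A point counts as its own neighbor, so min_samples includes it.
    """
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        return [j for j, q in enumerate(points)
                if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbors(j)
            if len(more) >= min_samples:  # core point: keep growing the cluster
                queue.extend(more)
    return labels


def cluster_means(points, labels):
    """Average the coordinates of the members of each cluster (noise skipped)."""
    members = {}
    for point, label in zip(points, labels):
        if label != -1:
            members.setdefault(label, []).append(point)
    return {label: tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for label, pts in members.items()}


# Made-up marking positions: two tight groups and one stray click.
marks = [(10, 10), (11, 10), (10, 11), (50, 50), (51, 50), (50, 51), (200, 200)]
labels = dbscan(marks, eps=3, min_samples=3)
print(labels)
print(cluster_means(marks, labels))
```

Markings that do not have enough close neighbors are labeled as noise and left out of the averages, which is what lets a stray click be ignored rather than distort the combined marking.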

Just look at how beautifully the clustering has combined all the markings into data that very precisely resembles what can be seen in the original image! The two fans in the lower right have been identified with stunning precision!

For an even more impressive display of this, have a look at the animated GIF below that allows you to track the visible fans, how they are being marked and how these markings are combined in a very precise representation of the object on the ground. It’s marvelous and I’m simply blown away by the quality of the data that we have received and how well this works!

This is not meant to say, though, that all is peachy and we can sit back and push some buttons to get these nice results. Sometimes the results don’t look as nice as these, and we need to carefully balance the amount of work we invest in fixing them, because we need to get the publication out into the world so that all the citizen scientists can see the fruit of their labor! And sometimes it’s not even clear to us whether what we see is a fan or a blotch, but that distinction is of course only a mental aid for deciding whether or not wind was blowing at the time of a CO2 gas eruption. So we have some ideas for how to deal with those situations, and that is one of the final things we are working on before submitting the paper. We are very close, so please stay tuned and keep submitting this kind of stunningly precise marking!

For your viewing pleasure I finish with another example of how nicely the clustering algorithm works to create final markings for a PlanetFour image:

Geometry of HiRISE observations is on Talk!

Good news: our wonderful development team has added a new feature that many of our volunteers have asked for! Now you can see north azimuth, sub-solar azimuth, phase angle, and emission angle directly on the Talk pages. You can see an example here. These angles give you information about how HiRISE took the image and where the Sun was at that moment.

To understand what those angles are, here is an illustration for you:

You see how the MRO spacecraft flies over the surface while HiRISE makes an image. The Sun illuminates the surface.

Consider a point on the martian surface P.

Emission angle: HiRISE does not necessarily look straight down at point P, i.e. the line connecting point P and HiRISE deviates somewhat from the vertical line. This deviation is marked as angle e on the sketch. This is the emission angle. It tells you how much we tilted the spacecraft to the side to make the image.

Phase angle: Because all the images you see in our project are from polar areas, the Sun is often low in the sky when HiRISE observes. To get an idea of how low, we use the phase angle: the angle between the line from the Sun to point P and the line from point P to HiRISE. It is marked φ in the sketch. The larger the phase angle, the lower the Sun is in the sky and the longer the shadows on the surface.

Sub-solar azimuth: To understand the direction towards the Sun in the frame of a HiRISE image, we use the sub-solar azimuth. In any frame that you see on our project, it is the angle between the horizontal line from the center of the frame towards the right and the Sun direction. It is counted clockwise. The notation for it in the sketch is a.

North azimuth: The orbit of the MRO spacecraft defines the orientation of HiRISE images. North azimuth tells us the direction to the Martian north pole. In the frame of an image it is counted the same way as the sub-solar azimuth, i.e. clockwise from the horizontal line connecting the center of the frame and its right edge.
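
If you like to think in terms of vectors, the definitions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are made up, the vectors are given in a local frame at point P whose z axis is the local vertical, and image coordinates are taken with x to the right and y downward, so that a clockwise azimuth measured from the right-pointing horizontal maps to the direction (cos a, sin a).

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def emission_angle(to_camera, vertical=(0.0, 0.0, 1.0)):
    """Angle between the local vertical at P and the direction from P to the camera."""
    return angle_between(to_camera, vertical)


def phase_angle(to_sun, to_camera):
    """Angle at P between the direction to the Sun and the direction to the camera."""
    return angle_between(to_sun, to_camera)


def azimuth_to_image_direction(azimuth_deg):
    """Unit vector in image coordinates (x right, y down) for an azimuth
    counted clockwise from the right-pointing horizontal."""
    a = math.radians(azimuth_deg)
    return math.cos(a), math.sin(a)


# Illustrative geometry: camera straight overhead, Sun on the horizon.
print(emission_angle((0.0, 0.0, 1.0)))
print(phase_angle((1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
print(azimuth_to_image_direction(90.0))
```

The same helper works for both the sub-solar azimuth and the north azimuth, since both are counted the same way in the image frame.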

I hope this helps you enjoy exploring Mars with HiRISE!

Anya